April Update: The Pleasantries and Pitfalls of UE5

Well, I made the leap from Unreal Engine 4.x to 5.1! The visual improvements are wonderful and it only hurt some of the time!

Let’s get into how it went down and leave a few tips for anyone out there making the move themselves.

The Easy Part

Honestly, making the move over to 5.x was, strictly speaking, about the easiest affair one can imagine. Kudos to the team at Epic for making things so portable. If you want to follow along with your project, here are the steps:

How to Migrate Your Game to UE5

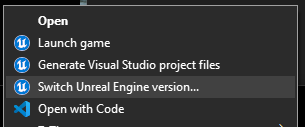

1. Right click on your project and choose “Switch Unreal Engine Version…”

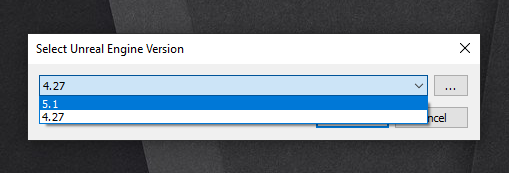

2. Choose a version that starts with the number five.

That’s it. Really. Open your project now, let shaders and whatnot re-compile, and you’re officially migrated to UE5.
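If you want to double-check which engine version a project is tied to after the switch, the `.uproject` file is plain JSON with an `EngineAssociation` field. Here is a minimal sketch that reads that field from the file's text. The sample contents and the helper names are made up for illustration, and source-built engines may store an identifier there instead of a version number, in which case `is_ue5` simply reports `False`.

```python
import json

# Hypothetical, trimmed-down .uproject contents used only for this example.
SAMPLE_UPROJECT = '{"FileVersion": 3, "EngineAssociation": "5.1", "Category": ""}'


def engine_association(uproject_text):
    """Return the EngineAssociation string from .uproject JSON text, or None."""
    data = json.loads(uproject_text)
    return data.get("EngineAssociation")


def is_ue5(association):
    """True when the association looks like a 5.x version string such as '5.1'."""
    return association is not None and association.split(".")[0] == "5"


version = engine_association(SAMPLE_UPROJECT)
print(version, is_ue5(version))
```

To run it against a real project, pass in the text of your own `.uproject` file instead of the sample string.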

Once you’re in the door, however, you want to start playing with the new tools, right? Right. You want next-gen global illumination, you want impeccable cast shadows, and you want it all on crazy-heavy geometry so that you never have to retopologize or author LODs or pack lightmap UVs ever again, and now making games will be easy because that’s what all the tech demos told us, right?

Eh, they didn’t quite say that.

Three weeks in, I can confirm that Lumen and Nanite are indeed as incredible as it says on the tin. But like any tin, there is a ton of fine print written on the back (it’s there, but definitely played down a bit), and you’re going to want to get acquainted with those details and caveats before you dive in head-first, dear indie game dev.

The Hard Part

The holy trinity of UE5 rendering tech, and what I set out to get going in this game first once I migrated over, is Lumen, Nanite, and Virtual Shadow Maps. These three tools work together to enable the loudly-touted win of achieving sophisticated real-time lighting in scenes with geometric complexity that would have been simply unimaginable a year ago.

Getting them up and running was surprisingly easy, just a question of knowing where the checkboxes were. Which is to say — it was really easy to kill the performance of my game.

Not even just my game, but the editor at large. I had intentionally skipped UE 5.0 and waited for a later point release in part because this game is very foliage-heavy (Nanite didn’t support foliage at the time), and in equal part because I figured any huge technical leap like what we were seeing in 5.0 naturally needs some time to mature. On 5.1 I was getting crashes to desktop every fifteen minutes — could the engine really be that unstable, over a year after being released to the public?

After a few days of digging into all manner of profiling tools and taking some stabs in the dark, I came to realize the answer to that question is “no.” UE5 seems sufficiently stable; I was just murdering my hardware with all this new fancy tech. In particular, Lumen on Epic settings seems to have been demanding more VRAM than the 8 GB my RTX 3070 Ti could provide, and eventually it would overrun and just crash out.

Uh oh. If my own dev machine can’t run my game, who the heck else will be able to play it?

There was clearly a lot to learn, so I dove into each of the three new systems in turn.

Lumen

Epic’s new global illumination solution is, of the three, probably the most impactful in terms of sheer user satisfaction. It makes an immediate, noticeable impact on every pixel in the scene. You throw in a lamp and it starts bouncing light around in natural ways that respond to the complexity of your geometry, in real time, with no baking or special UV considerations. In some ways it feels like the next-gen version of the experience of coming to a real-time engine in the first place — those early, heady days of dragging assets into a viewport, fully lit and shaded, maybe even animating before your eyes straight out of the gate — the instant gratification isn’t just fun, it’s inspiring.

It turns out that kind of inspiration comes with a price tag. Using UE4’s GI I was reliably getting 80 fps from the camera view seen above. In UE5 with Lumen, on the same machine, that is cut in half, to 40 fps.
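Frame rates hide how big that hit is, so it helps to convert them to frame times. The sketch below does the arithmetic for the two numbers above. Attributing the whole difference to Lumen is my simplifying assumption, and the 60 fps target is just an example budget, not a figure from my project.

```python
def frame_time_ms(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


ue4_ms = frame_time_ms(80)       # UE4 GI: 12.5 ms per frame
ue5_ms = frame_time_ms(40)       # UE5 with Lumen: 25.0 ms per frame
extra_ms = ue5_ms - ue4_ms       # assumed here to be all Lumen: 12.5 ms
budget_ms = frame_time_ms(60)    # example target of 60 fps: about 16.7 ms

print(f"UE4 GI:      {ue4_ms:.1f} ms")
print(f"UE5 + Lumen: {ue5_ms:.1f} ms")
print(f"Extra cost:  {extra_ms:.1f} ms")
print(f"Over a 60 fps budget by {ue5_ms - budget_ms:.1f} ms")
```

In other words, going from 80 to 40 fps means every frame takes 12.5 ms longer, which is most of an entire 60 fps frame budget on its own.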

So the expense is clearly large enough that one can’t just pay it out of one’s frametime budget without considering where else in the render thread to claw some savings back from. And even then, even an optimized Lumen setup isn’t a Setup For All Systems — even Fortnite, Epic’s love letter to dynamic gameplay/lighting/everything, falls back to traditional GI solutions of UE4 yesteryear on lower-end PCs, and needless to say downshifts to even cheaper methods than that on mobile hardware. Fortnite aside, UE5 appears set up by default to switch Lumen off entirely once the engine’s scalability settings are set to Medium or lower.

Nanite

Epic’s new geometry streaming solution, Nanite, is every bit to geometry what Lumen is to lighting: it takes a workflow that should never have been possible and makes it as simple as checking a box (on every mesh in your project, but hey, you can do it as a bulk action!).

The official Unreal Engine YouTube channel has spent the better part of the last year posting content that clearly and visually explains what Nanite is and how it works, so I won’t attempt to do that here. I’ll just throw out what seems to me to be the key takeaway, the paradigm shift at the heart of Nanite.

In the Old Days we had to load all of an object into the scene to render any part of it, and the whole object had to be one consistent level of detail throughout. That meant a ton of workflow overhead: making sure objects weren’t too heavy, making them even less heavy when they were far away, deciding at what point the game should switch out the close-up version for the far-away version, hiding that visual “pop” from the player when it happened, and so forth. In the Nanite days, the complexity of an object in the scene (and in memory) is decoupled from the mere fact of its presence in the scene, and is instead linked to screen space and resolution: the engine affords an object a dynamic number of triangles based on its size on, and proximity to, the screen.

Much has been made about how this unlocks the ability to use meshes with insane, “film-grade” triangle counts in your scenes, and that is clearly technically true. But just because you can…should you?

Probably not.

I will say that the Nanite developers have clearly anticipated the side effect this new power will have in the hands of developers: insane spikes in disk storage requirements for games, in an age where the install footprints for AAA titles already seem to be getting away from us. To that end, they have coupled the Nanite format with some seriously impressive data compression. Just by migrating my pre-existing, last-gen, game-res assets to Nanite, my built game’s footprint went from 5.7 GB on disk to 3.3 GB. That’s over 40% lopped off just by migrating. Wild stuff.
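For anyone who wants to run the same comparison on their own builds, the savings figure is just the size difference divided by the original size. A quick sketch using my two build sizes (the function name is only for illustration):

```python
def disk_saving_fraction(before_gb, after_gb):
    """Fraction of the original footprint removed, e.g. 0.25 for a 25% cut."""
    return (before_gb - after_gb) / before_gb


saving = disk_saving_fraction(5.7, 3.3)
print(f"Saved {5.7 - 3.3:.1f} GB, about {saving * 100:.0f}% of the original build")
```

With 5.7 GB before and 3.3 GB after, that works out to 2.4 GB saved, or roughly 42% of the original footprint.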

I get why Epic is leaning into the whole “just think about how much geometry you can shove into your scenes now” angle when they market this stuff, especially since the industries most immediately poised to make full use of this tech today are not game studios (think film houses and architecture firms, who can use Lumen/Nanite on known hardware they control). But “you don’t have to change anything about your art process or triangle budgets and you get huge disk savings” seems like a whole other win one can reach for, especially for smaller indie studios who benefit the most from under-the-hood optimizations they can adopt more or less “for free.”

In any event, this clearly unlocks a lot of artistic freedom for teams who found their art style limited by mesh resolution, but it will just get anyone who thinks they can start pumping out messy, needlessly dense geometry into trouble, both with their own computer and with the hard drives of their customers! There’s definitely a computational hit one takes on by adopting Nanite too. I haven’t dug too deep into the stats, but it feels small compared to Lumen, and Epic’s hardware recommendations for Nanite basically include any Nvidia/AMD GPU released in the last seven years (i.e. older cards than you were probably planning on supporting anyway). Interestingly, they make no mention of SSDs one way or the other. It’s hard to imagine Nanite dynamically loading geometry off of spinning rust being a slick experience, though. Something to test, I suppose.

Virtual Shadow Maps

VSMs are Epic’s new method for shadow mapping. They can fairly be thought of as the Yin to Nanite’s Yang: they seek to offer a simpler, unified path that replaces a variety of shadowing methods from days past that a developer might dial in and transition between based on distance from camera and other factors. Also, much in the way Nanite enables the use of high-fidelity meshes in-scene, VSMs are meant to increase shadow resolution in kind.

This all looks wonderful and comes at a cost. Are you sensing a theme? The VSM system tries to minimize the amount of work needed to generate these maps per frame by caching anything it can from frame to frame, but portions of the cache can be invalidated, namely by dynamic movement of an object. This could be a character, a moving prop, or (and this is particularly relevant for games that are, ahem, set in the middle of a forest, like mine) wind moving through foliage.

Well crap. The whole dang scene is foliage.
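To make the problem concrete, here is a toy model of a cached shadow map. It is only a sketch of the general idea (cached pages get thrown out when something moving overlaps them) and not Epic’s actual implementation. The page count, the page indices, and the function name are all hypothetical.

```python
def pages_to_redraw(total_pages, moving_object_pages):
    """Return the set of cached shadow pages invalidated this frame.

    moving_object_pages holds one set of page indices per moving object,
    naming the pages that object's shadow overlaps.
    """
    dirty = set()
    for pages in moving_object_pages:
        dirty.update(p for p in pages if 0 <= p < total_pages)
    return dirty


TOTAL_PAGES = 64  # hypothetical size of the cache for this example

# One character walking through an otherwise static scene touches two pages.
character_only = [{10, 11}]

# Wind-animated foliage everywhere: something is moving in every page.
windy_forest = [{page} for page in range(TOTAL_PAGES)]

print(len(pages_to_redraw(TOTAL_PAGES, character_only)), "of", TOTAL_PAGES, "pages redrawn")
print(len(pages_to_redraw(TOTAL_PAGES, windy_forest)), "of", TOTAL_PAGES, "pages redrawn")
```

In the first case almost the whole cache survives from frame to frame. In the second, nothing survives, so the cache buys you nothing.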

For the moment I’ve disabled World Position Offset (the material property that is traditionally used to fake wind movement in plants, among other things) on all plants in my scene, and am planning to re-introduce the “hit” of that in a future build based on proximity to camera.
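The proximity idea boils down to a distance check per plant. The sketch below shows only that logic in plain Python. It is not engine code, and the function name, positions, and cutoff distance are placeholders I picked for the example.

```python
import math


def wind_enabled(plant_pos, camera_pos, max_distance):
    """True when a plant is close enough to the camera to get wind animation."""
    return math.dist(plant_pos, camera_pos) <= max_distance


camera = (0.0, 0.0, 0.0)
near_fern = (300.0, 400.0, 0.0)     # 500 units from the camera
far_pine = (3000.0, 4000.0, 0.0)    # 5000 units from the camera
CUTOFF = 1000.0                     # hypothetical cutoff distance

print(wind_enabled(near_fern, camera, CUTOFF))  # True
print(wind_enabled(far_pine, camera, CUTOFF))   # False
```

The appeal is that only the plants near the player keep invalidating shadow pages, while the distant bulk of the forest stays static and cacheable.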

The long and short of it is: if you’re going to use Nanite, you’re going to use VSMs too. De-coupling them is probably technically possible, but one gets the sense Epic never envisioned such a thing. Which means, if you’re planning on supporting platforms that you decide Nanite isn’t a fit for, then VSMs go from being that elegant, unified shadowing path to a feature for high-end systems that you still need to support fallbacks for using all of the Old Ways.

Worth It?

All this seems to suggest that, if you are a game dev looking to attract the General Audience out there in hardware terms, the promise of Lumen/Nanite/VSM isn’t here yet. That promise is a streamlined, unified path for simpler lighting and asset authoring workflows, free of lightmaps, normal baking, LOD tuning, cascaded shadow maps tuned by distance from the camera, and likely a suite of other workflows I’m forgetting. Implementing these tools now yields incredible visual outcomes, but at this point it only adds to the complexity of your workflows rather than taking away from it. Until hardware catches up out in the real world, which will happen but hasn’t yet, they are yet another layer of tech for which fallbacks for lower-end devices must still be painstakingly made, monitored, and maintained. One only has to spend twenty minutes perusing Epic’s GDC material from this year on YouTube to find plenty of medium-to-large studios who have clearly decided this price is worth paying, and to the extent that competing in the market on that scale is, rightly or wrongly, a graphical arms race, that makes some amount of sense. But it’s a high price for an indie dev, especially for someone like me who’s still learning the ropes.

Maybe this price is worth paying to you. Maybe it’s not. It would depend on the nature of your project, and your personal goals for the development of your studio and/or your own skillsets.

As for this game? I’m still a bit tentative, but have decided to press on in the Land of Nanite (and Lumen, and the rest of it) for now. I’m likely another couple of years from being done with this game, and Epic seems to have a number of optimizations on the horizon that might help pay down some of the cost of taking this tech onboard: 5.2 should bring optimized code paths for all of this tech, including reflections in Lumen, geometry streaming from disk in Nanite, and shadow maps in scenes with many local lights (which my project doesn’t currently have, but will need before it’s all said and done). Over that time, the hardware situation out there in the world should trend toward more and more next-gen GPUs and faster SSDs in rigs, as it always has. By the time the day comes to publish this game for real, hopefully it will land right in the intersection of those two arcs.

And if it doesn’t? I’ll just have to optimize some LODs and lighting setups for fallback. More articles to come on that front, just as soon as I wrap my head around some good practices.

Looking Ahead

In May, it’s back to implementing the basics of the puzzle gameplay I discussed in March’s devlog. Between dev sessions (and baby bottles), I’ll be shoring up my server’s config to lay the foundation for migrating my code repos to Gitea. That will mean setting up more foundational portions of the system (SSH access, time syncing, firewall rules) in Ansible to simplify and automate some of the maintenance going forward. Only two hands around here, after all…these computers need to start pulling some of their own weight.

See you in a month!

-Tyler

aviary[0]

Untitled first project, releasing as it is developed.

| Field | Value |
| --- | --- |
| Status | In development |
| Author | The Aviary |
| Genre | Adventure |
| Tags | First-Person, Indie, Singleplayer, Unreal Engine, Walking simulator |
| Languages | English |
